This blog post describes a recent project where I coded a simple automated bot that can play the NES/Famicom version of Galaga at a level that is at least better than a beginner’s. I am planning to release the code as open source eventually and will update this blog post when that is done.

Background

I was inspired by an article about the CastlevaniaBot describing (in wonderful detail) one man’s quest to build a bot that can finish Castlevania I on NES. I learned that the project was based on coding a plugin in Java for an NES emulator called Nintaco (which I didn’t know existed at the time). I’ve always been fascinated by simulations and automation and started wondering if I could write a similar project myself.

The Castlevania project has some very ambitious code to deal with path finding through the levels and making sure the player character doesn’t die by falling into any pits (anyone who has played Castlevania knows how frequently this happens). I definitely wanted to avoid doing anything that had to deal with jumping on platforms, so I started thinking about some of the older and simpler NES games. Pretty soon Galaga popped into my head. It felt quite feasible since there are no platforms, all stages are single screens only, and you only have three controls to worry about: left, right and fire.

I downloaded Nintaco and started exploring its API. I didn’t feel excited at the prospect of coding in Java or Scala (the two JVM languages I know best) though. I decided to try out Kotlin instead, and this small project turned out to be great for getting into it.

First steps

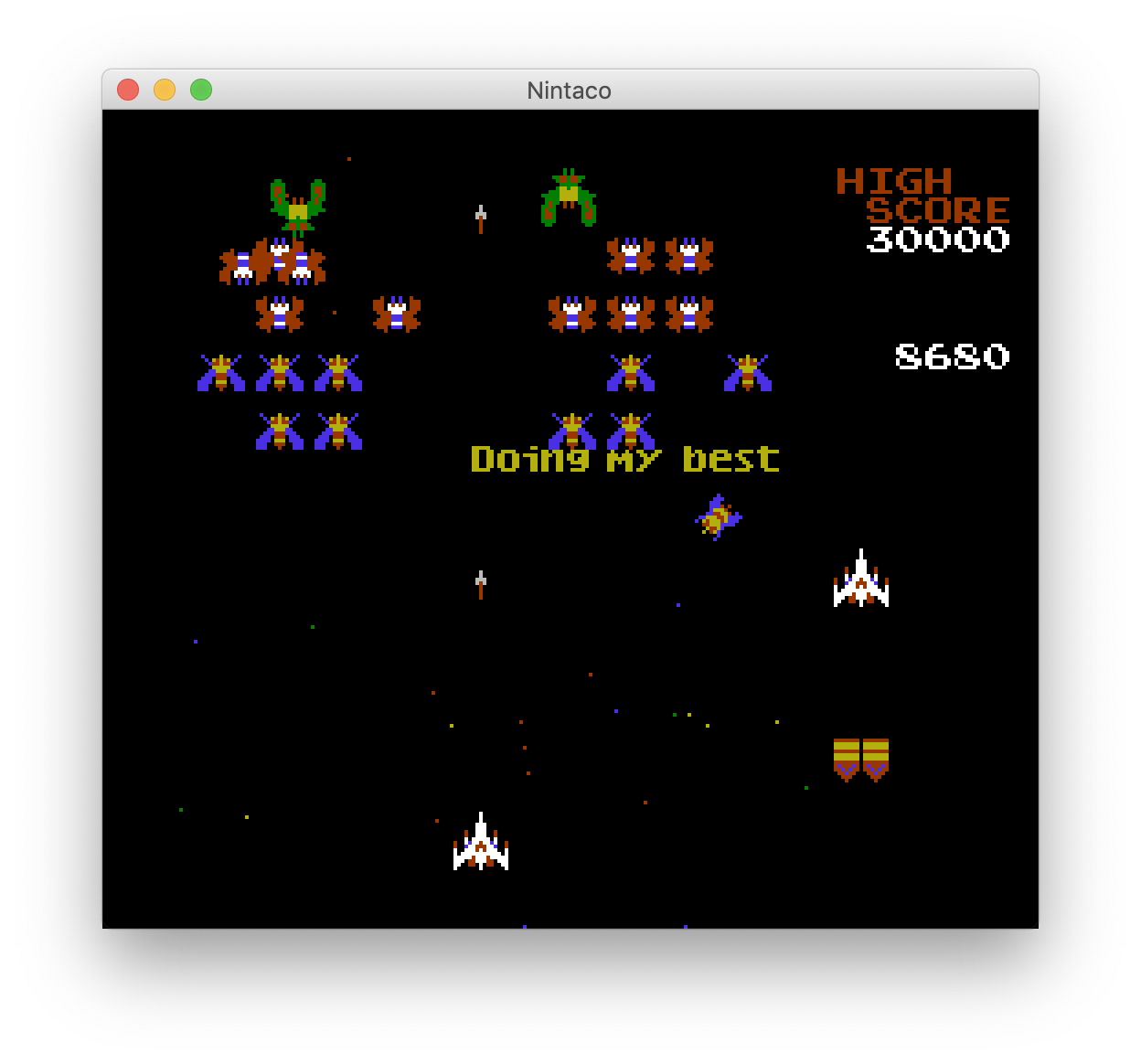

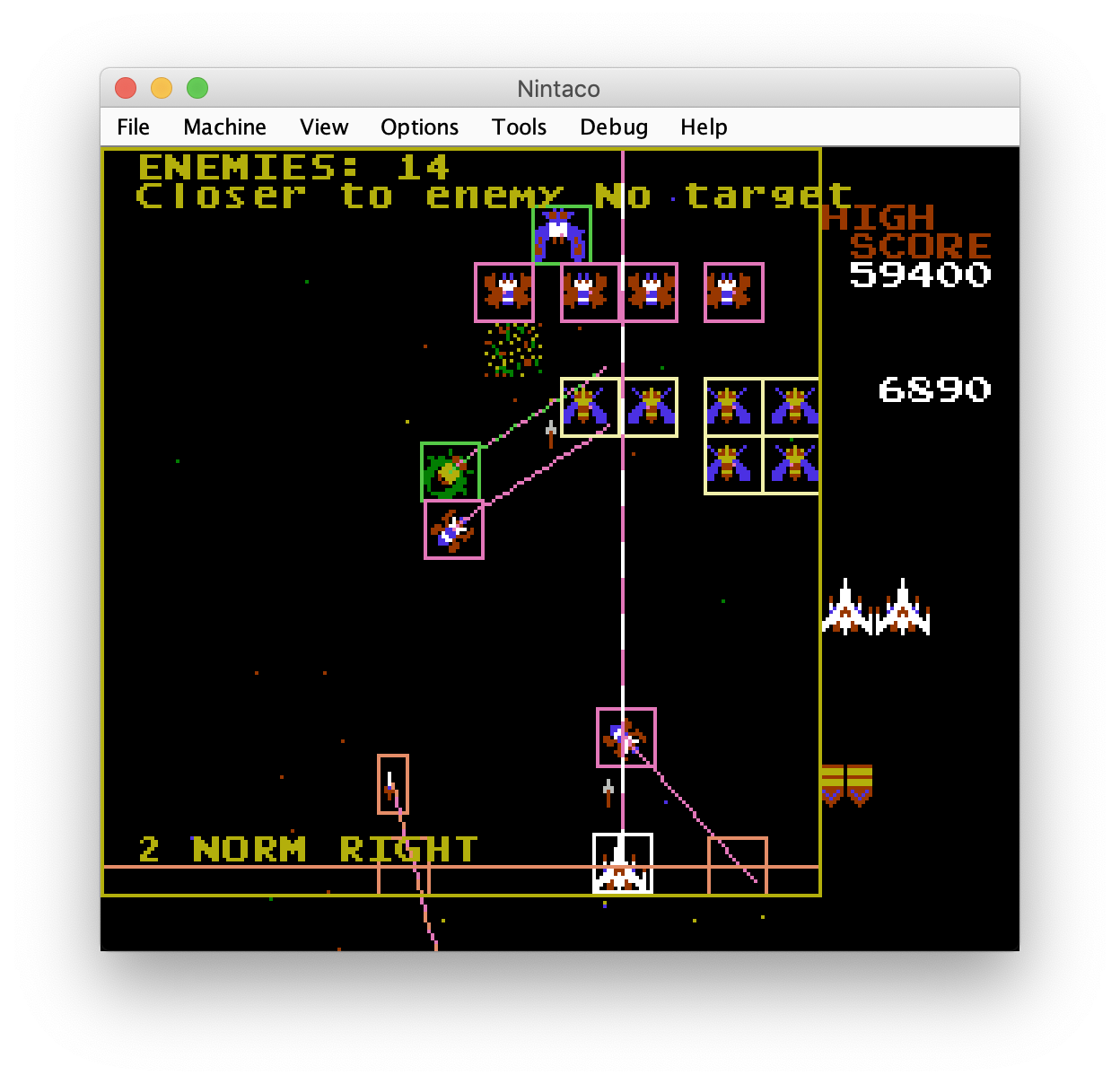

I started by just trying to figure out how to feed input into the emulator. It didn’t take long before I had an initial proof-of-concept where the player ship was just staying in place in the middle of the screen and shooting like a madman. I also found that the Nintaco API allows you to overlay text on the screen. I used this to print the message you see in the screenshot. This most stupid of bots was already able to sometimes get lucky and score around 10k using this strategy.

Giving vision to the bot

Next I started digging into how I could get my bot to understand what was going on in the game. Nintaco has multiple features for checking what the NES CPU is doing and what the memory contents are.

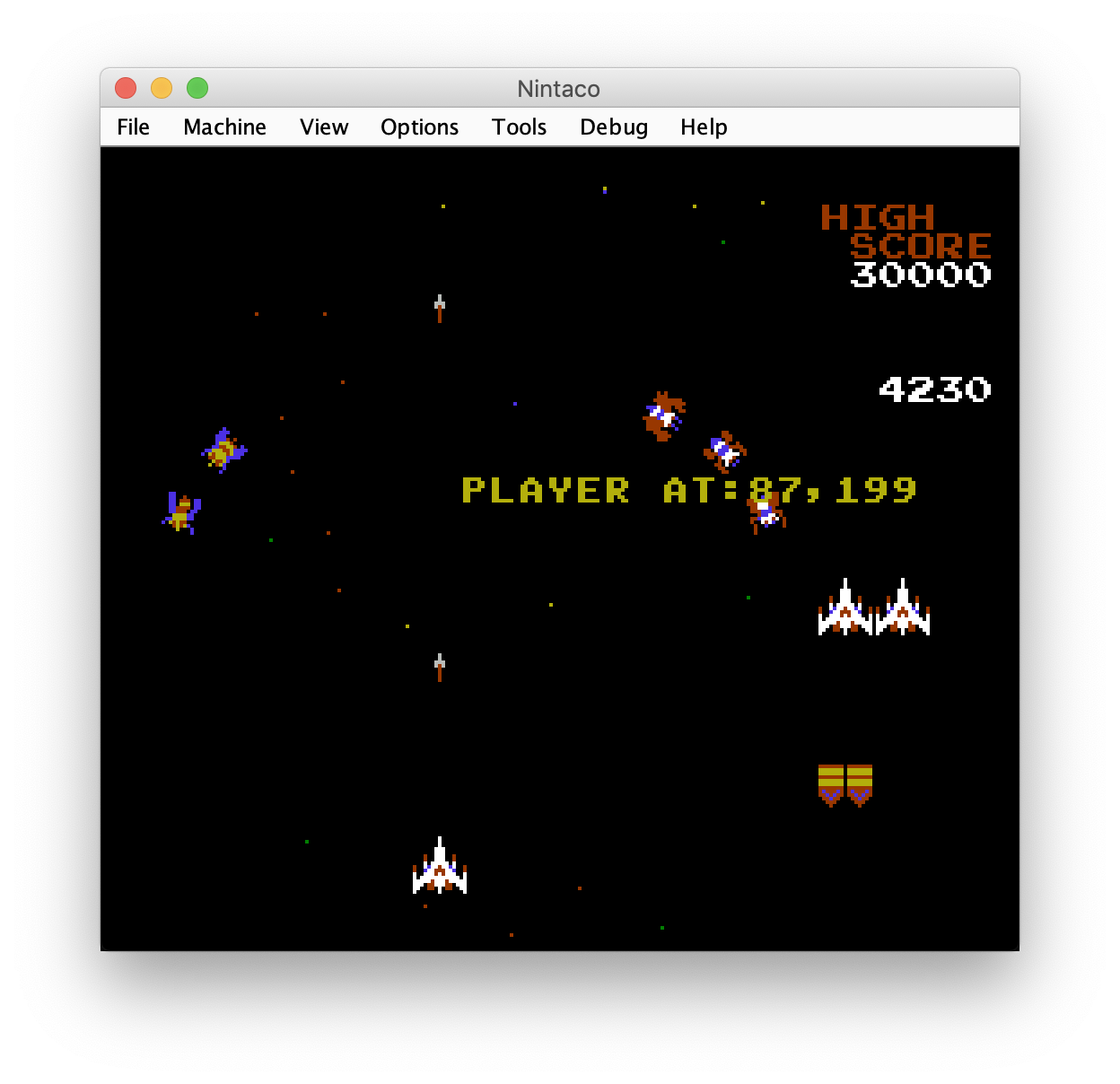

Initially I briefly looked at the assembly source code for the game, but my knowledge of NES hardware was too limited to get anywhere with this approach. Next I opened up the RAM explorer to try to see if I could figure out where the X and Y coordinates of the player and enemies were. Finding the player’s X and Y coordinates was pretty simple using the watch features, so I made the emulator print them to the screen.

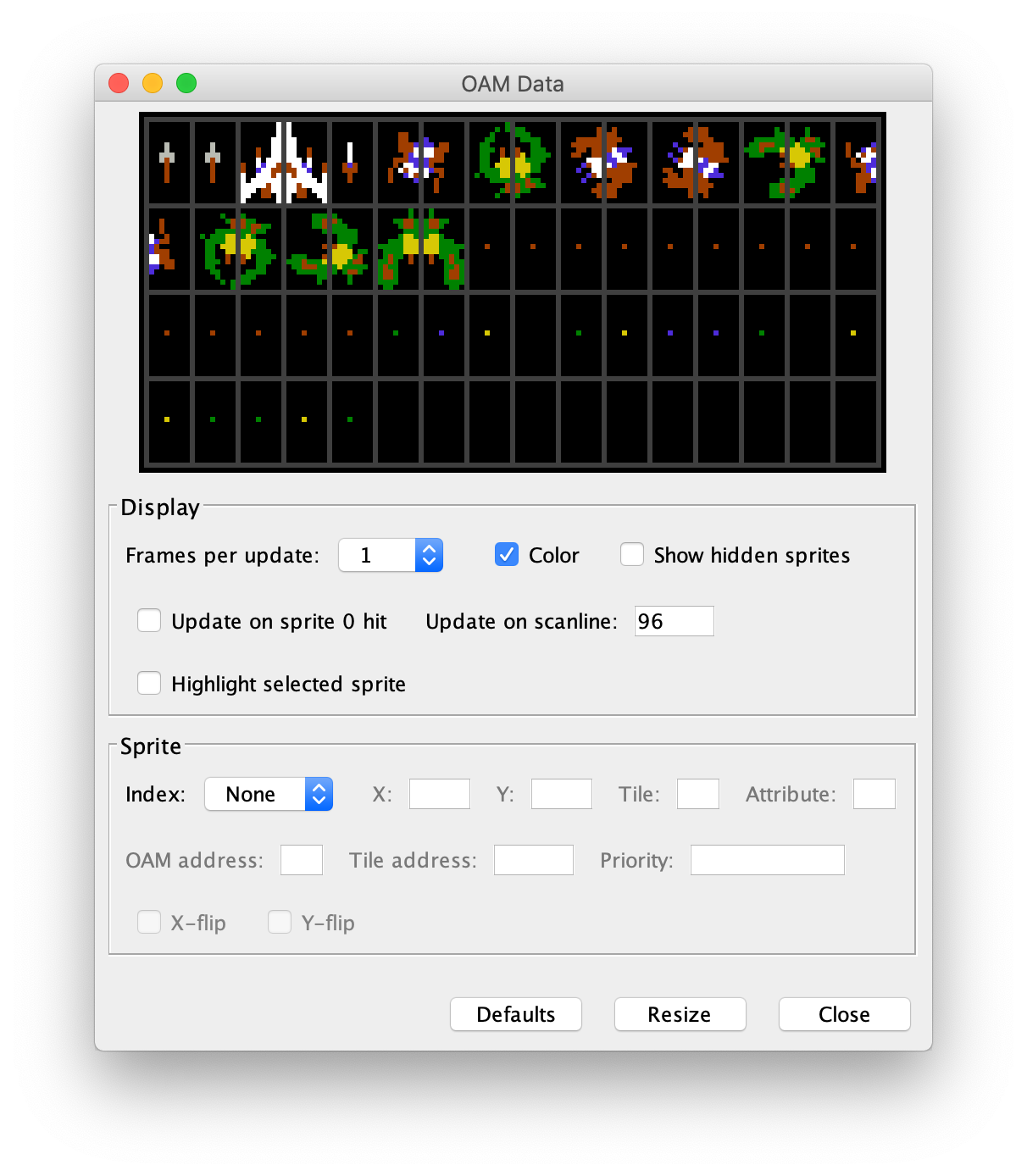

Next I tried to see if I could find the X and Y coordinates of the enemies. But the memory was changing a bit too “randomly” for me to make sense of it. I went back to the CastlevaniaBot approach and noticed that it was also not relying on the RAM, but instead was reading the enemy locations by looking at the OAM (Object Attribute Memory).

OAM is a list of the current sprites (game characters) that will be rendered onto the screen by the NES as part of drawing the current game screen. By pausing the game in the emulator I could click a sprite in the OAM table and take a look at its attributes. This way I could see which graphics tile (referred to by a simple number pointing to another graphics tile table) was drawn at which position. This gave me my initial foothold to start figuring out how to find the locations of the enemies.
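To make the OAM layout concrete, here is a minimal Python sketch (an illustration only, not the bot's actual Kotlin code). It assumes the 256 raw OAM bytes have already been read from the emulator; how that is done is not shown. Each of the 64 sprite entries is four bytes: Y position, tile index, attributes and X position. The names `Sprite` and `parse_oam` are made up for this example.

```python
from typing import List, NamedTuple


class Sprite(NamedTuple):
    x: int
    y: int
    tile: int
    attributes: int


def parse_oam(oam: bytes) -> List[Sprite]:
    """Decode a raw 256-byte OAM dump into the sprites that are on screen."""
    if len(oam) != 256:
        raise ValueError("OAM is always 64 sprites * 4 bytes = 256 bytes")
    sprites = []
    for i in range(0, 256, 4):
        # Byte order within one entry: Y, tile index, attributes, X.
        y, tile, attributes, x = oam[i:i + 4]
        # Sprites with Y in 0xEF..0xFF sit below the visible area, which is
        # how games usually hide unused entries.
        if y >= 0xEF:
            continue
        sprites.append(Sprite(x=x, y=y, tile=tile, attributes=attributes))
    return sprites
```

The tile index is what identifies the kind of enemy, so the next step would be a lookup table from tile indices to enemy types, which has to be collected by hand.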

Unexpected complications with the enemies

There were a couple of unexpected complications I had to deal with when finding the locations of the enemies. One of them was that only some of the enemies were actually showing up in the OAM table. It turns out that the NES has a limit on the number of sprites it can show: OAM only has room for 64. On top of that, it can draw at most 8 sprites on the same horizontal line. This is why many games swap enemies that share a line in and out from frame to frame, which makes the characters appear to flicker.

Enemies in the background

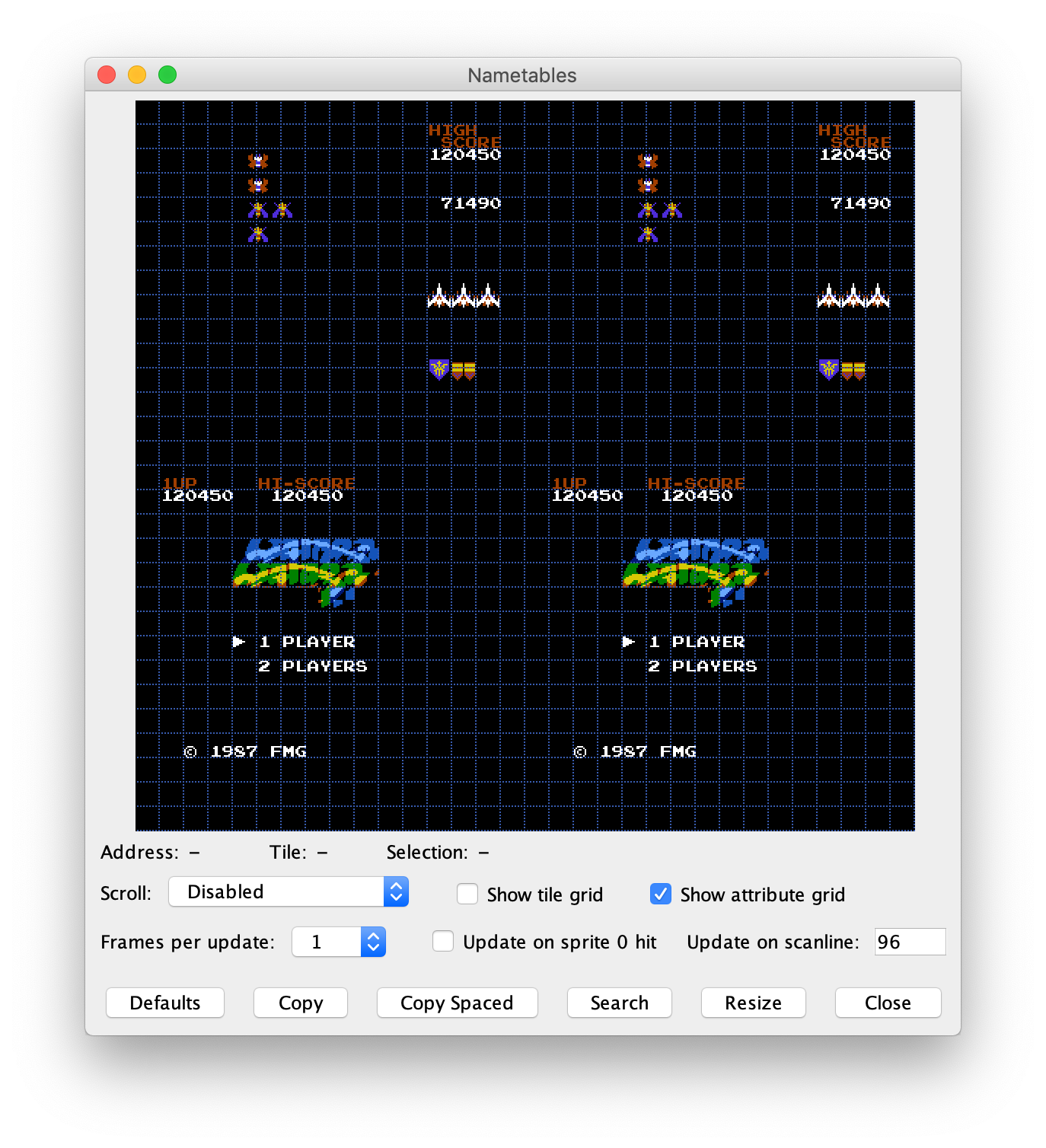

Galaga basically has two types of enemies: flying ones that can move around freely, and “patrolling” enemies that slowly flap around horizontally in Space Invaders style. Drawing the large number of patrolling enemies as sprites would make for a lot of flickering. To prevent this, Galaga actually draws the patrolling enemies using background tiles. To find the patrolling enemies I had to look up the currently shown background tiles in the “nametables”.
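A nametable lookup can be sketched roughly like this in Python (an illustration only, not the bot's Kotlin code). A nametable is a grid of 32 by 30 tile indices, one byte each, where every tile covers 8 by 8 pixels. The sketch assumes the nametable bytes have already been read from the emulator and ignores scrolling. The function name and the tile number in the usage note are hypothetical.

```python
from typing import Iterable, List, Tuple

COLUMNS, ROWS, TILE_SIZE = 32, 30, 8


def find_background_tiles(nametable: bytes,
                          wanted: Iterable[int]) -> List[Tuple[int, int, int]]:
    """Return (pixel_x, pixel_y, tile) for every wanted tile in a nametable."""
    wanted_set = set(wanted)
    hits = []
    # Only the first 960 bytes are tile indices; the 64 bytes after them
    # are attribute (palette) data and must not be treated as tiles.
    for index, tile in enumerate(nametable[:COLUMNS * ROWS]):
        if tile in wanted_set:
            column, row = index % COLUMNS, index // COLUMNS
            hits.append((column * TILE_SIZE, row * TILE_SIZE, tile))
    return hits
```

Calling `find_background_tiles(nametable, [0x40])` would return the top-left pixel position of every tile numbered `0x40`, where `0x40` stands in for whatever tile numbers the game really uses for a patrolling enemy.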

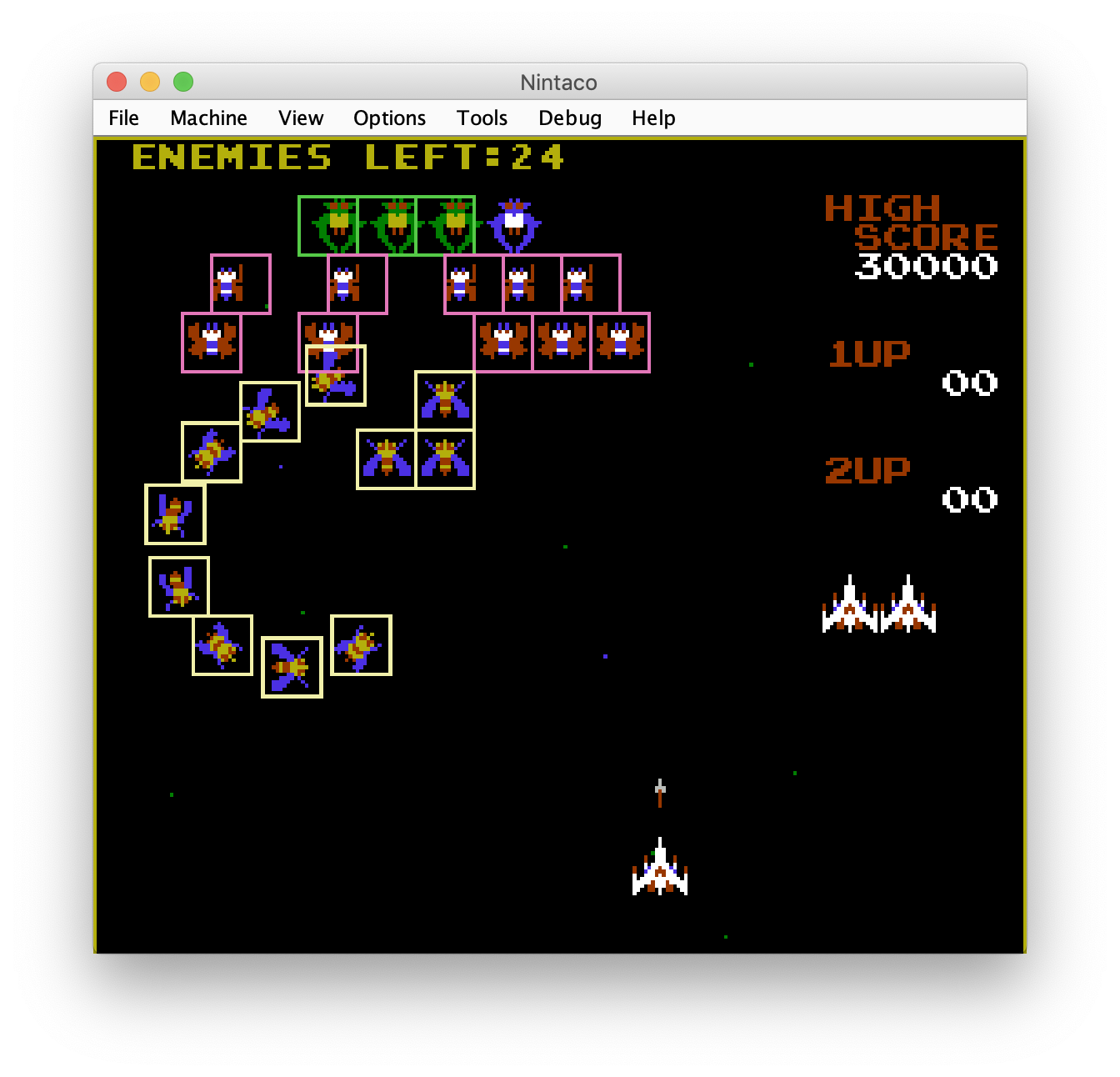

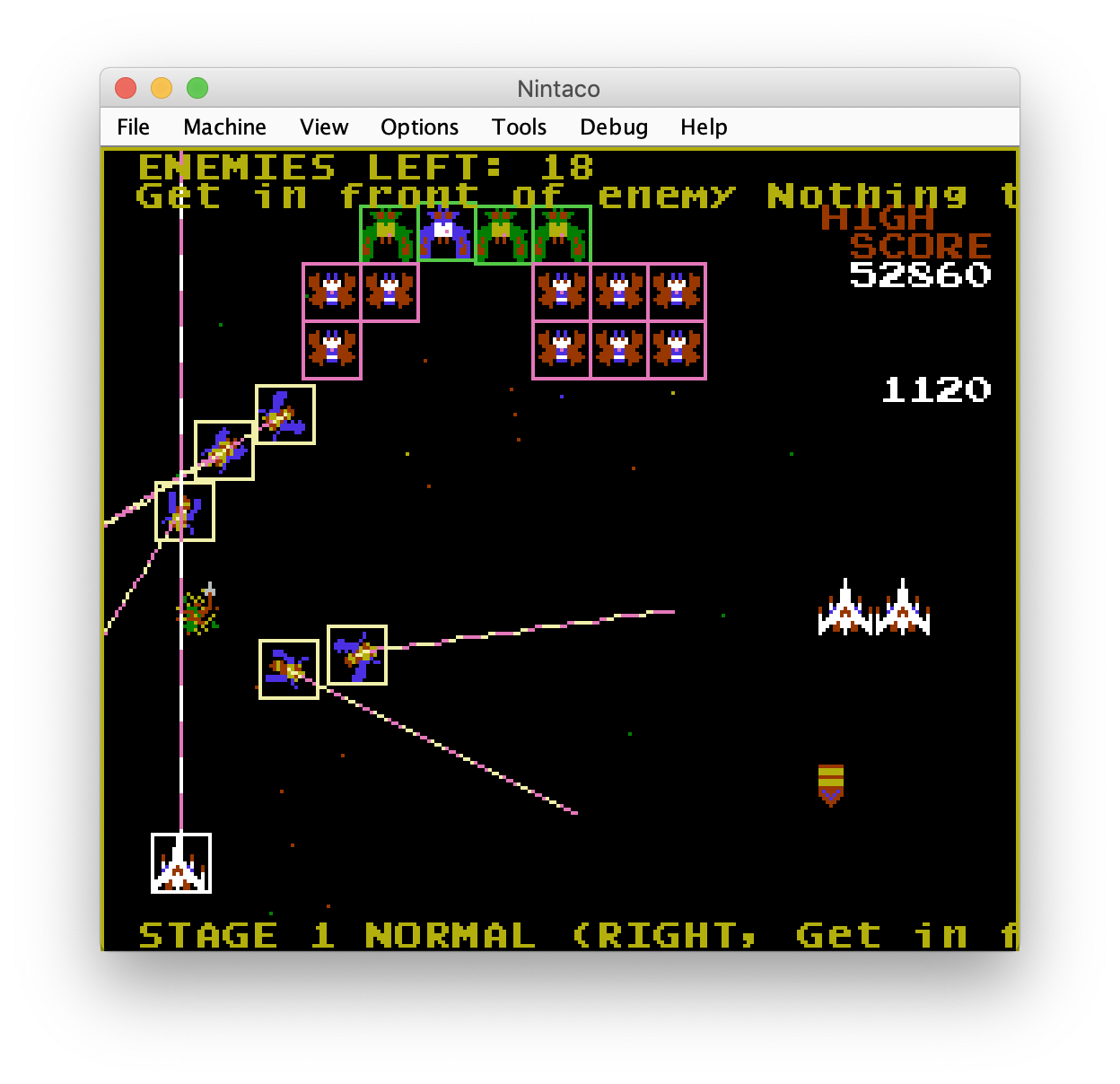

After figuring out how to read data from OAM and from the nametables, I had to do a lot of tedious work to pause the emulator for each frame and encode all the different tile indices used for all the enemies in their different rotations and for the background enemies. I also dug around some more in the Nintaco API and noticed it had a simple Canvas2D style drawing API that allowed me to draw boxes around the enemies. This allowed me to basically see what the bot was detecting in real time.

Matching enemies

Now my bot was able to see enemies, but since the bot was only seeing a list of current locations for each frame there was no simple way for the bot to know that the yellow fly in the current frame was the same as the yellow fly that had moved 1 pixel since the last frame.

I had to code up an algorithm that tried to guess which enemy was the same between the frames. In short, it limits the search to enemies of the same type as the one being matched, filters out the enemies that are too far away to possibly be the same, and then chooses the closest of the remaining ones. This simple algorithm worked rather well and allowed me to start working on movement prediction.
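The three steps (same type, not too far away, closest one wins) could look something like this Python sketch. It is an illustration, not the bot's Kotlin code. The `Enemy` type, the `kind` labels and the `max_distance` default of 8 pixels are assumptions made up for the example.

```python
import math
from typing import List, NamedTuple, Optional


class Enemy(NamedTuple):
    kind: str
    x: float
    y: float


def match_enemy(previous: Enemy, current: List[Enemy],
                max_distance: float = 8.0) -> Optional[Enemy]:
    """Guess which enemy in the current frame is `previous` one frame later."""

    def distance(e: Enemy) -> float:
        return math.hypot(e.x - previous.x, e.y - previous.y)

    # Keep only enemies of the same type that are close enough to be plausible.
    candidates = [e for e in current
                  if e.kind == previous.kind and distance(e) <= max_distance]
    if not candidates:
        return None  # the enemy was destroyed, left the screen, or flickered out
    return min(candidates, key=distance)
```

A greedy match like this can pair two old enemies with the same new one when they fly close together. Removing each matched enemy from the candidate list would be one way to avoid that.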

Predicting movements

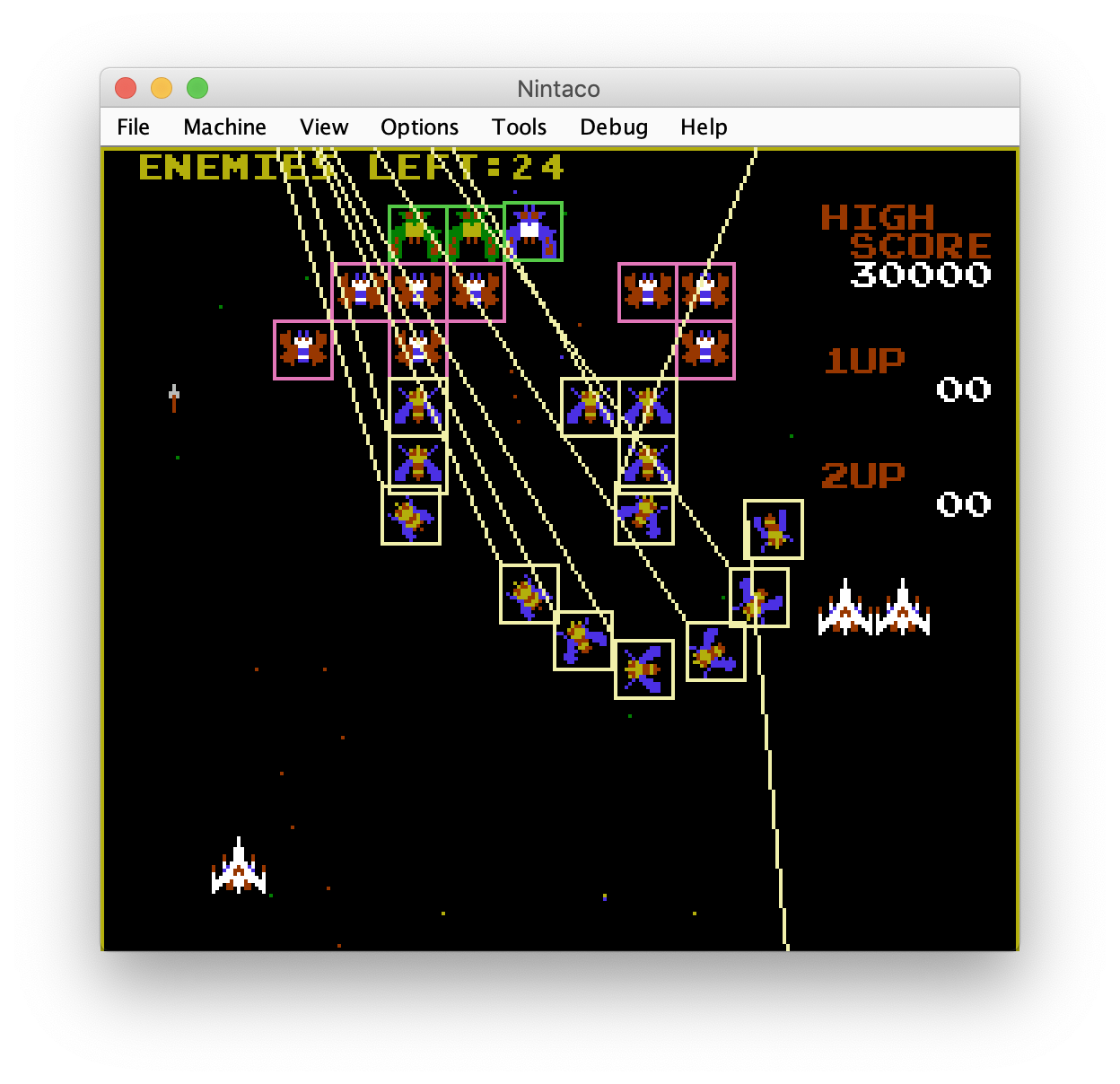

The player shots in Galaga are not incredibly fast. In order to hit enemies you basically have to predict a few frames ahead where they will move. My bot was going to have to do this kind of prediction as well. I started by checking where a sprite was located a few frames ago compared to the current frame. Then I connected these two positions with a line and extrapolated that line out in the same direction from the current position.
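A straight-line extrapolation of this kind can be sketched in a few lines of Python (an illustration, not the bot's Kotlin code). The function name and parameters are made up for the example. The result holds one predicted point per future frame.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def predict_path(past: Point, present: Point,
                 frames_between: int, frames_ahead: int) -> List[Point]:
    """Extrapolate a straight line; element i is the guess for frame i + 1."""
    if frames_between <= 0:
        raise ValueError("frames_between must be positive")
    # Average velocity in pixels per frame over the observed interval.
    vx = (present[0] - past[0]) / frames_between
    vy = (present[1] - past[1]) / frames_between
    return [(present[0] + vx * t, present[1] + vy * t)
            for t in range(1, frames_ahead + 1)]
```

For example, an enemy seen at (100, 40) four frames ago and at (104, 48) now moves 1 pixel right and 2 pixels down per frame, so `predict_path((100, 40), (104, 48), 4, 3)` gives (105, 50), (106, 52) and (107, 54).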

After a bit of debugging I ended up with a movement prediction algorithm that was good enough to actually hit enemies sometimes. I recall that my initial stupid implementation was just to predict a line going straight up from the player’s ship and shoot if that “shot line” was intersecting with an enemy’s predicted movement line.

The accuracy of the player’s shots was still quite terrible though. I decided to split up the prediction lines into segments representing the amount of movement in a single frame. I could then render the prediction lines as segmented lines to see what the bot was predicting.

This allowed me to further program the bot to shoot if the player’s shot line was intersecting an enemy’s movement line at the same number of frames into the future. This was still not perfect since the movement of the enemies is quite erratic, but it was a great improvement over the initial naive implementation.
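The "same frame" check can be illustrated with the following Python sketch (not the bot's Kotlin code). It assumes the shot flies straight up at a constant speed and that the enemy's predicted path is a list with one point per future frame. The function name, the shot speed and the hit box half-sizes are assumed values for the example, not numbers measured from the game.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float]


def first_hit_frame(shot_x: float, shot_y: float, shot_speed: float,
                    enemy_path: List[Point],
                    half_width: float = 8.0,
                    half_height: float = 8.0) -> Optional[int]:
    """Return the first future frame in which the shot and the enemy overlap."""
    for frame, (enemy_x, enemy_y) in enumerate(enemy_path, start=1):
        # Screen Y grows downward, so a shot moving up has a decreasing Y.
        shot_y_then = shot_y - shot_speed * frame
        if (abs(shot_x - enemy_x) <= half_width
                and abs(shot_y_then - enemy_y) <= half_height):
            return frame
    return None
```

If this returns a frame number, firing now should hit, provided the prediction holds. Because positions are only compared once per frame, a fast shot could in principle skip over a small hit box, so the hit box size needs to be chosen with the shot speed in mind.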

The bot could also guess whether it had to move left or right to get closer to the kill zone where the prediction lines intersected in the same frame.

At this point I also printed some more debug information to the screen to figure out what decisions the bot was taking.

Playing safer

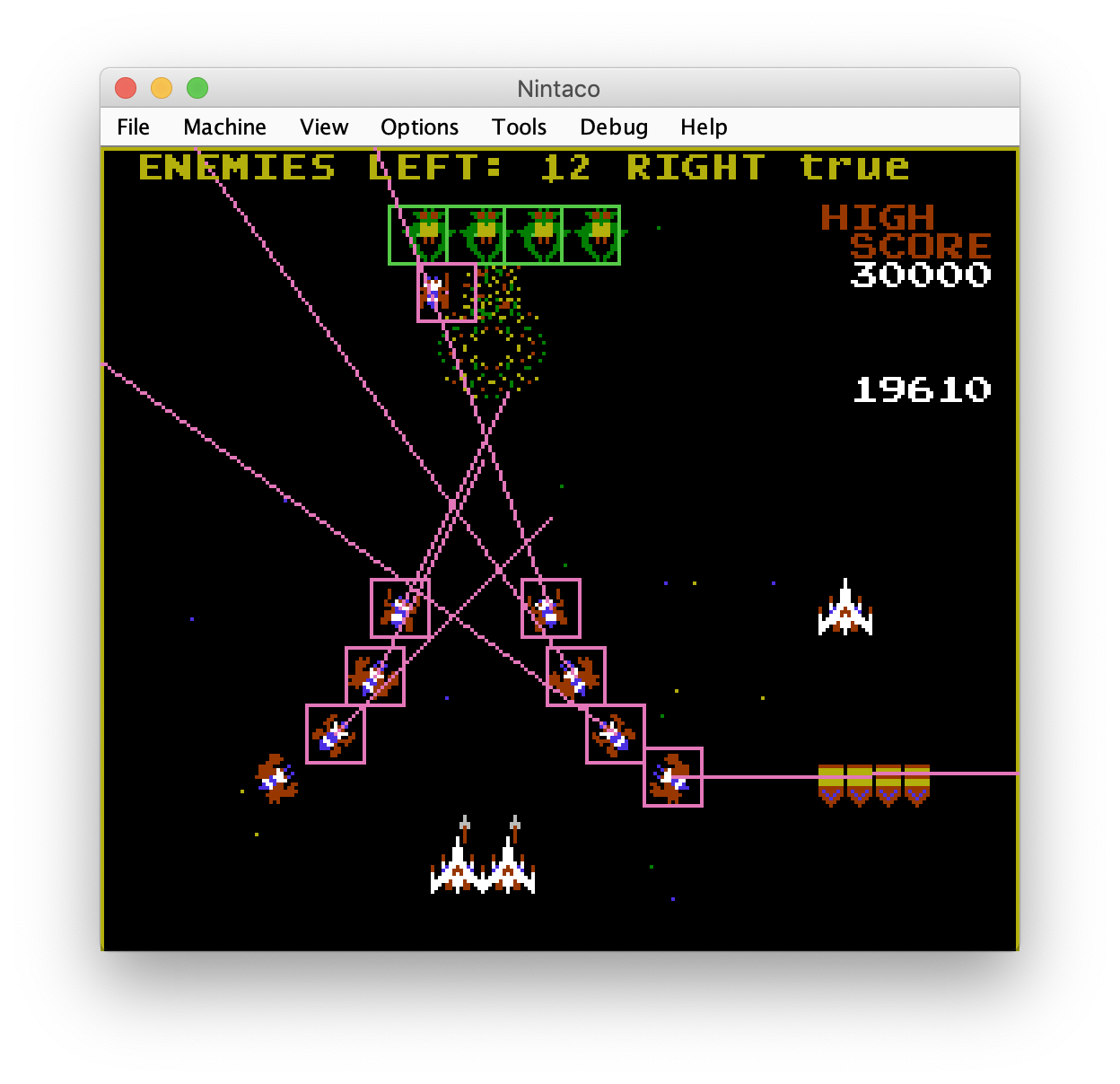

By implementing my prediction algorithm I was also able to figure out when the player’s ship was about to get hit by either an enemy or a bullet and try to get out of the way. For this I also started with a rather stupid implementation that was just trying to blindly escape.

However, the bot was easily getting cornered and making other mistakes that got it killed in stupid ways. The next step I came up with to improve survivability was to implement a concept of “danger zones”. If an enemy or bullet was about to cross the player’s line within a set number of frames, that area was marked as a danger zone and the bot would try to move away ahead of time.

The danger zone algorithm also involved some complications. The bot had to avoid getting cornered at the left and right sides of the screen. Adjacent danger zones also had to be joined together when the gap between them was too small for the player to fit, so that the bot would not try to squeeze in between them.
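Joining danger zones is essentially interval merging. Here is a minimal Python sketch (an illustration, not the bot's Kotlin code), assuming each zone is a `(left, right)` pair of X coordinates along the player's row. The function name and the player width in the usage note are made up for the example.

```python
from typing import List, Tuple

Zone = Tuple[float, float]  # (left, right) along the player's row


def merge_danger_zones(zones: List[Zone], player_width: float) -> List[Zone]:
    """Join zones whose gap is too narrow for the player's ship to fit."""
    merged: List[Zone] = []
    for left, right in sorted(zones):
        if merged and left - merged[-1][1] < player_width:
            # The gap to the previous zone is too small (or the zones
            # overlap), so extend the previous zone instead of adding one.
            merged[-1] = (merged[-1][0], max(merged[-1][1], right))
        else:
            merged.append((left, right))
    return merged
```

With a player width of 16, the zones (100, 120) and (125, 140) are only 5 pixels apart and become the single zone (100, 140). The same idea can cover the screen edges by treating a too-narrow gap between a zone and the edge as part of the zone.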

Results

In the end I had worked for a couple of hours daily for a little over a week and the bot reached a high score of around 170k. The bot was still doing a lot of stupid things, but sometimes it could be quite exhilarating to watch it bravely battle through a rain of enemies and bullets. I recorded a video around New Year’s Eve 2018 showing the bot in action. I’ve done quite a few bug fixes and tweaks since this video was made, but it is still quite fun to watch.

In the end there is nothing really fancy going on here. It all comes down to some tedious work to get the detection to work, some simple high school (or maybe lower) math and some heuristics based on trial-and-error.

Regrets and further work

The bot still makes some terrible decisions at times and I have some ideas for ways this project could be improved:

- The bot is absolutely terrible at the bonus stages. The bonus stages contribute greatly to the final score. However, the movement patterns on the bonus stages are so unpredictable that they would probably have to be hardcoded. I was more interested in just trying to make the bot survive for as long as it can, so I didn’t spend much time on this issue.

- Another scoring feature of the game is that the green/blue boss enemies at the top of the screen will sometimes fly down to capture the player with their tractor beam. If the player gets captured and destroys the boss when it comes flying down again, the captured player can be freed and you can control two ships at the same time. This gives you double firepower but also increases your hit box. Currently the bot only detects the tractor beam but doesn’t use that information. Instead the bot will sometimes randomly get captured and save the ship. Then it will either use that to obliterate a lot of enemies skillfully and quickly or just die a pathetic death almost immediately. This logic could obviously be improved.

- The concept of danger zones sounds similar to an existing AI technique used in robotics called artificial potential fields. I heard about this technique on the cool AI and Games YouTube channel but haven’t had time to try implementing it yet.

- Another potential solution to explore could be to predict the game some number of frames ahead (like a typical chess playing AI would do). I think this might help the bot to avoid getting cornered as easily when it gets to stages 15 and beyond, where the enemies start getting more aggressive and increase their rates of fire.

- While the current linear movement prediction works quite well (especially when the enemies are closer to the bottom of the screen), it still has many flaws. It does not take into account that the enemies usually fly in a rounded path. A better extrapolation algorithm might help here. It also cannot deal with sudden changes in movement path. Here maybe some sort of machine learning based approach might help the bot to predict more accurately.

- A fun way to try to improve the bot is to use save states to save the game at the start of every stage until the bot dies. Then you can load that state and replay it over and over to try to come up with some idea for what logic could have saved the bot from making that mistake. You can also use the emulator’s turbo feature (press tab to toggle) to skip through the first stages quickly.